Competition link: https://www.datafountain.cn/competitions/448

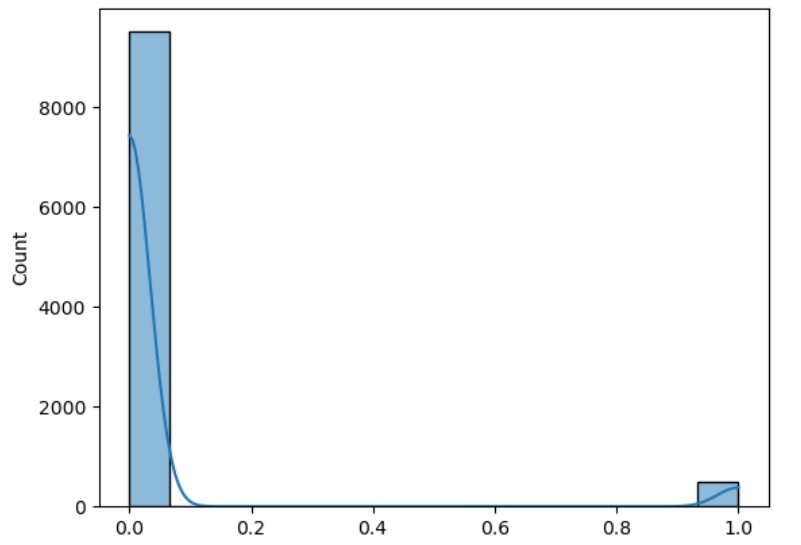

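Task analysis: this is a classification task. It is hard for two reasons: first, the dataset is difficult to process; second, the classes are imbalanced, with negative samples making up 95% of the data.

Runtime environment, framework, and language

Operating system: Linux ubuntu 4.15.0-213-generic #224-Ubuntu SMP Mon Jun 19 13:30:12 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

Hardware: Intel® Xeon® Gold 6226R CPU @ 2.90GHz + 1 × NVIDIA GeForce RTX 3090 (CUDA Version: 12.4)

Language and runtime: Python 3.9.21 (main, Dec 11 2024, 16:24:11) + Jupyter Notebook 7.2.2

Framework: PyTorch 2.5.1+cu124

Data analysis and feature design, extraction, and processing

The data-processing code is in data_processing.ipynb, and the processed data is stored in train_data.csv and test_data.csv.

Feature overview: 16 features in total (not counting job_id and fraudulent):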

| Feature | Unique Values Count | Value Type | NaN Values Count |
|---|---|---|---|
| title | 6851 | object | 0 |
| location | 2284 | object | 198 |
| department | 944 | object | 6388 |
| salary_range | 608 | object | 8393 |
| company_profile | 1397 | object | 1885 |
| description | 8663 | object | 0 |
| requirements | 6965 | object | 1566 |
| benefits | 3861 | object | 4084 |
| telecommuting | 2 | int64 | 0 |
| has_company_logo | 2 | int64 | 0 |
| has_questions | 2 | int64 | 0 |
| employment_type | 5 | object | 1992 |
| required_experience | 7 | object | 3960 |
| required_education | 13 | object | 4548 |
| industry | 125 | object | 2781 |
| function | 37 | object | 3654 |
| fraudulent (label) | 2 | int64 | 0 |

The features fall into four types:

Categorical features: telecommuting, has_company_logo, has_questions, employment_type, required_experience, required_education, industry, function

Continuous features: none

Text features: title, company_profile, description, requirements, benefits

Complex features: location, department, salary_range

The first two types are easy to handle; the last two are not. My approach is to extract categorical and continuous features from the text and complex features, and then process everything in a uniform way.

Converting department and location into categorical features

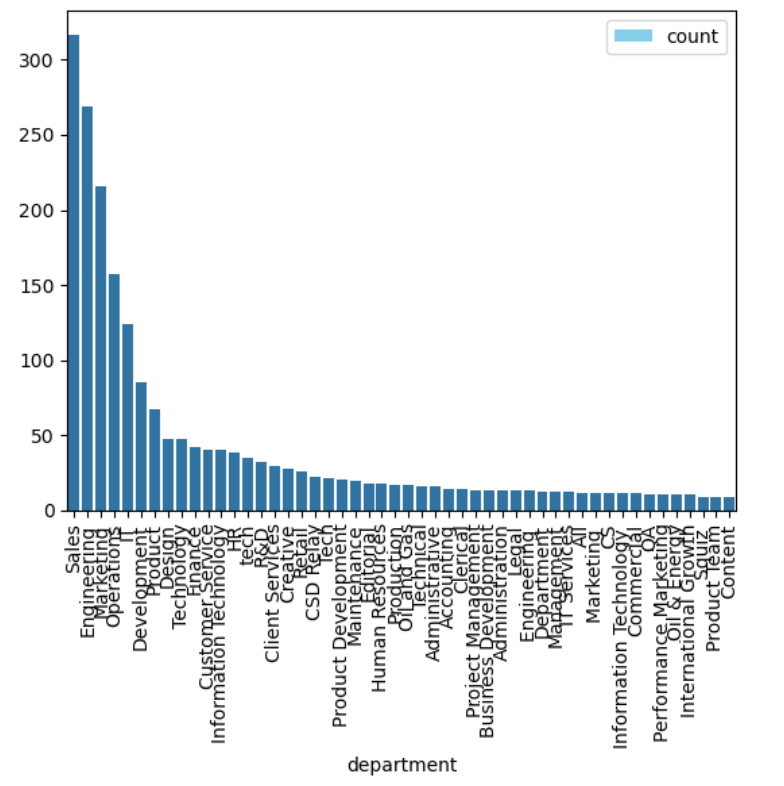

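Let's look at the 50 most frequent department values, shown in the figure: sales occurs most often, followed by engineering.

Every department value that occurs 4 times or fewer (roughly one in a thousand samples or less) is set to others. This sharply reduces the number of distinct department values, so the column can be treated as a categorical feature.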

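```python
# Count how often each department value occurs (value_counts ignores NaN)
department_counts = data['department'].value_counts()
# Values seen 4 times or fewer are merged into a single 'others' bucket
low_frequency_departments = department_counts[department_counts <= 4].index
data['department'] = data['department'].replace(low_frequency_departments, 'others')
cat_features += ['department']
```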

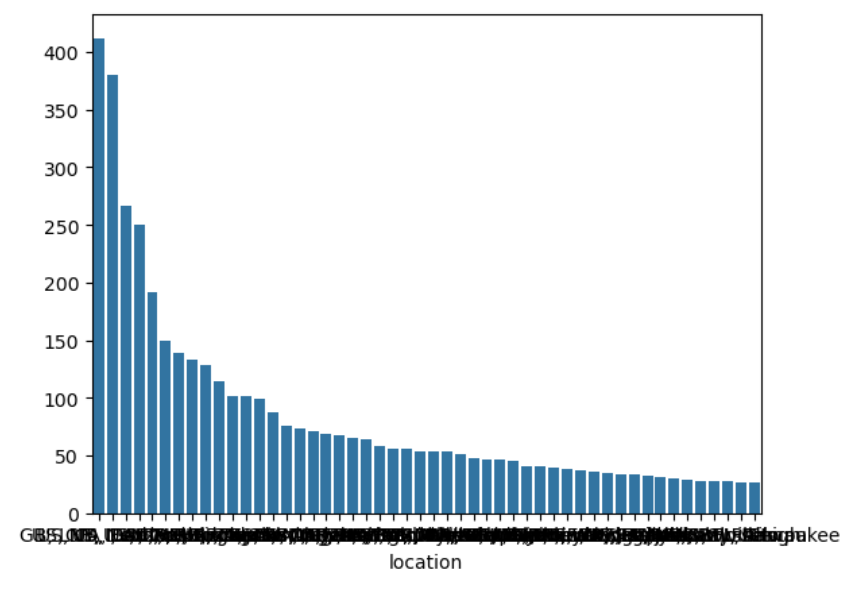

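Likewise, for the location feature I set every value that occurs 10 times or fewer (again roughly one in a thousand samples or less) to others, which turns location into a categorical feature.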

```python
# Same thresholding for location, with a cutoff of 10 occurrences
location_counts = data['location'].value_counts()
low_frequency_locations = location_counts[location_counts <= 10].index
data['location'] = data['location'].replace(low_frequency_locations, 'others')
cat_features += ['location']
```

After this step, location has 144 distinct values and department has 107.
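The thresholding used for both columns can be sketched without pandas. This is a minimal illustration, not the notebook's code: `bucket_rare` and the toy `departments` list are hypothetical, and `None` stands in for a missing value that should stay missing.

```python
from collections import Counter


def bucket_rare(values, max_rare_count, other_label="others"):
    """Replace values occurring at most `max_rare_count` times with `other_label`.

    None stands in for a missing value and is left untouched.
    """
    counts = Counter(v for v in values if v is not None)
    return [
        v if v is None or counts[v] > max_rare_count else other_label
        for v in values
    ]


# Toy data: two frequent departments, two rare ones, one missing value
departments = ["sales"] * 6 + ["engineering"] * 5 + ["legal", "r&d", None]
bucketed = bucket_rare(departments, max_rare_count=4)
distinct = sorted({v for v in bucketed if v is not None})
print(distinct)
```

Missing values are deliberately not merged into the bucket, so a later encoding step can still give them their own indicator.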

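Converting salary_range into continuous features

Continuous features are generally numeric. A salary is a number, so it is a continuous feature. I therefore split salary_range into two continuous features, min_salary and max_salary, for the lowest and highest salary. Invalid values are set to NaN.

The code is as follows: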

```python
import numpy as np
import pandas as pd

salary_range = data.salary_range.copy()
# Split 'min-max' strings; missing values stay NaN (not a list)
salary_range_seg = list(salary_range.str.split('-').values)

for ind, seg in enumerate(salary_range_seg):
    if isinstance(seg, list) and len(seg) == 2:
        sal_min, sal_max = seg
        if not sal_min.isdigit() or not sal_max.isdigit():
            # Non-numeric bounds are treated as invalid
            print(f"Invalid range at index {ind}: {seg}")
            salary_range_seg[ind] = [np.nan, np.nan]
        elif sal_min == '0' and sal_max == '0':
            # '0-0' carries no information, so treat it as missing
            salary_range_seg[ind] = [np.nan, np.nan]
    else:
        # Missing values, or strings without exactly one '-'
        salary_range_seg[ind] = [np.nan, np.nan]

salary_range_df = pd.DataFrame(salary_range_seg, columns=['min_salary', 'max_salary'])
salary_range_df['min_salary'] = pd.to_numeric(salary_range_df['min_salary'], errors='coerce')
salary_range_df['max_salary'] = pd.to_numeric(salary_range_df['max_salary'], errors='coerce')
salary_range_df = salary_range_df.astype({'min_salary': 'Int64', 'max_salary': 'Int64'})

data = pd.concat([data, salary_range_df], axis=1)
data.drop('salary_range', axis=1, inplace=True)
Contin_features += ['min_salary', 'max_salary']
```
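The validation rules are easier to check in isolation as a single function. The sketch below is an assumption-laden alternative rather than the notebook's code: `parse_salary_range` is a hypothetical helper, it uses a regular expression instead of `str.isdigit`, and the sample strings are made up.

```python
import re

# Exactly two runs of ASCII digits separated by a single '-'
_RANGE = re.compile(r"^([0-9]+)-([0-9]+)$")


def parse_salary_range(text):
    """Return (min_salary, max_salary) as ints, or (None, None) if invalid."""
    if not isinstance(text, str):
        return (None, None)          # missing value (None or NaN)
    match = _RANGE.match(text.strip())
    if match is None:
        return (None, None)          # non-numeric bound or wrong number of parts
    low, high = int(match.group(1)), int(match.group(2))
    if low == 0 and high == 0:
        return (None, None)          # '0-0' carries no information
    return (low, high)


for raw in ["20000-28000", "0-0", "Oct-20", None]:
    print(repr(raw), "->", parse_salary_range(raw))
```

With pandas, such a function could be applied with `Series.map` and the resulting tuples split into two columns.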

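Converting text features into continuous features

Before processing the text features, I count the number of words in each text and add it to the dataset as an extra continuous feature.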

```python
data[text_features] = data[text_features].fillna('')
for feature in text_features:
    feature_count_words = f'{feature}_count_words'
    # Number of space-separated tokens (with split(' '), an empty string counts as 1)
    data[feature_count_words] = data[feature].astype(str).str.split(' ').apply(len)
    Contin_features += [feature_count_words]
```

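How can text features be converted into continuous features?

My approach is to first concatenate the text in title, company_profile, description, requirements, and benefits into combined_text, then use TfidfVectorizer to pick out the most frequent terms in the text (unigrams, bigrams, and trigrams) and use each of them as a new feature. Every such feature is continuous and holds the TF-IDF weight of that term in the sample, not its raw count. For example, a sample whose combined_text mentions machine learning 10 times gets a larger value for the feature bigram: machine learning than a sample that mentions it once, but the value is a normalized weight rather than 10.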

```python
import pandas as pd
from scipy.sparse import hstack
from sklearn.feature_extraction.text import TfidfVectorizer

# Merge all text columns into one string per sample
data['combined_text'] = data[text_features].apply(lambda row: ' '.join(row.astype(str)), axis=1)
data = data.drop(columns=text_features)

# One vectorizer per n-gram order, each keeping only its most frequent terms
vectorizer_unigrams = TfidfVectorizer(stop_words='english', ngram_range=(1, 1), max_features=200)
vectorizer_bigrams = TfidfVectorizer(stop_words='english', ngram_range=(2, 2), max_features=100)
vectorizer_trigrams = TfidfVectorizer(stop_words='english', ngram_range=(3, 3), max_features=50)

X_unigrams = vectorizer_unigrams.fit_transform(data['combined_text'])
X_bigrams = vectorizer_bigrams.fit_transform(data['combined_text'])
X_trigrams = vectorizer_trigrams.fit_transform(data['combined_text'])
X_combined = hstack([X_unigrams, X_bigrams, X_trigrams])

unigram_features = [f"text_freq_unigrams_{feat}" for feat in vectorizer_unigrams.get_feature_names_out()]
bigram_features = [f"text_freq_bigrams_{feat}" for feat in vectorizer_bigrams.get_feature_names_out()]
trigram_features = [f"text_freq_trigrams_{feat}" for feat in vectorizer_trigrams.get_feature_names_out()]
combined_features = unigram_features + bigram_features + trigram_features
Contin_features += combined_features

# Replace the raw text with the 350 TF-IDF columns
tfidf_df = pd.DataFrame(X_combined.toarray(), columns=combined_features)
data = data.drop(columns=['combined_text'])
data = pd.concat([data.reset_index(drop=True), tfidf_df.reset_index(drop=True)], axis=1)
```
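To see what kind of value a TF-IDF feature holds, here is a dependency-free sketch of the weighting. It assumes scikit-learn's default settings (smoothed idf, L2-normalised rows) and handles unigrams only; `tfidf_rows` and the three toy documents are hypothetical.

```python
import math
from collections import Counter


def tfidf_rows(docs):
    """TF-IDF with smoothed idf and L2-normalised rows (unigrams only)."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    vocab = sorted({tok for toks in tokenized for tok in toks})
    # Document frequency: in how many documents each term appears
    doc_freq = Counter(tok for toks in tokenized for tok in set(toks))
    idf = {t: math.log((1 + n_docs) / (1 + doc_freq[t])) + 1 for t in vocab}
    rows = []
    for toks in tokenized:
        counts = Counter(toks)
        raw = [counts[t] * idf[t] for t in vocab]
        norm = math.sqrt(sum(x * x for x in raw)) or 1.0
        rows.append([x / norm for x in raw])
    return vocab, rows


# 'learning' occurs 10 times in the first document
docs = ["learning " * 10 + "python", "python jobs", "sales jobs"]
vocab, rows = tfidf_rows(docs)
weight = rows[0][vocab.index("learning")]
print(round(weight, 3))
```

Because each row is scaled to unit length, every weight lies between 0 and 1 no matter how often the term occurs.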

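Processing continuous features

All continuous features are numeric, so they only need to be standardized to zero mean and unit variance. NaN values are imputed with the column mean, which becomes 0 after standardization.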

```python
from sklearn.impute import SimpleImputer
from sklearn.preprocessing import StandardScaler

# Fill missing values with the column mean, then standardize each column
imputer = SimpleImputer(strategy='mean')
data[Contin_features] = imputer.fit_transform(data[Contin_features])
scaler = StandardScaler()
data[Contin_features] = scaler.fit_transform(data[Contin_features])
```
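A small worked example shows why a mean-imputed entry ends up at exactly 0 after standardization. This is a pure-Python sketch with made-up salaries. `impute_and_standardize` is a hypothetical helper that uses the population standard deviation, as StandardScaler does.

```python
import math


def impute_and_standardize(column):
    """Mean-impute None entries, then scale to zero mean and unit variance."""
    observed = [x for x in column if x is not None]
    mean = sum(observed) / len(observed)
    filled = [mean if x is None else x for x in column]
    # Population standard deviation (divide by n)
    std = math.sqrt(sum((x - mean) ** 2 for x in filled) / len(filled))
    return [(x - mean) / std for x in filled]


min_salary = [20000, None, 40000, 60000, None]   # hypothetical values
scaled = impute_and_standardize(min_salary)
print([round(x, 3) for x in scaled])
```

Filling with the mean leaves the column mean unchanged, so the filled entries sit exactly at the center of the standardized distribution.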

Processing categorical features

One-hot encoding is sufficient.

```python
# dummy_na=True adds a separate indicator column for missing values
data = pd.get_dummies(data, columns=cat_features, dummy_na=True)
```

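Generating the training and test sets

As the assignment requires, 10% of the data is held out as the test set. The split is stratified so that the proportion of fraudulent values is the same in the training and test sets.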

```python
from sklearn.model_selection import train_test_split

# Move the label to the last column
data = data[[col for col in data.columns if col != 'fraudulent'] + ['fraudulent']]
X = data.iloc[:, :-1]
y = data.iloc[:, -1]

# Stratified 90/10 split keeps the fraudulent ratio equal in both sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.1, stratify=y, random_state=42
)

train_data = pd.concat([X_train.astype('float'), y_train], axis=1)
test_data = pd.concat([X_test.astype('float'), y_test], axis=1)
train_data.to_csv('train_data.csv', index=False)
test_data.to_csv('test_data.csv', index=False)
```
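What stratification guarantees can be shown with a small pure-Python sketch. It is not how train_test_split is implemented; `stratified_split` and the 95% / 5% toy labels are hypothetical.

```python
import random


def stratified_split(labels, test_size=0.1, seed=42):
    """Return (train_idx, test_idx) with each class split in the same ratio."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_size)   # same fraction from every class
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return train_idx, test_idx


labels = [0] * 950 + [1] * 50      # hypothetical 95% / 5% class mix
train_idx, test_idx = stratified_split(labels)
test_pos_rate = sum(labels[i] for i in test_idx) / len(test_idx)
train_pos_rate = sum(labels[i] for i in train_idx) / len(train_idx)
print(test_pos_rate, train_pos_rate)
```

A plain random split of so few positives could easily leave the test set with a noticeably different positive rate, which would make metrics harder to compare.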

The resulting datasets look like this:

```
<class 'pandas.core.frame.DataFrame'>
Index: 8999 entries, 3990 to 5488
Columns: 812 entries, min_salary to fraudulent
dtypes: float64(811), int64(1)
memory usage: 55.8 MB
<class 'pandas.core.frame.DataFrame'>
Index: 1000 entries, 4359 to 3820
Columns: 812 entries, min_salary to fraudulent
dtypes: float64(811), int64(1)
memory usage: 6.2 MB
```

Both the training set and the test set have 812 columns: 811 features plus the fraudulent label.
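The figure of 812 columns can be cross-checked against the numbers reported earlier. The arithmetic below rests on assumptions: job_id is dropped before encoding, the reported distinct-value counts for department and location include others, and one-hot encoding with dummy_na=True adds one NaN column per encoded feature.

```python
# Continuous columns
n_salary = 2                       # min_salary, max_salary
n_word_counts = 5                  # one *_count_words column per text feature
n_tfidf = 200 + 100 + 50           # unigrams + bigrams + trigrams
n_continuous = n_salary + n_word_counts + n_tfidf

# Categorical columns: distinct values per feature, plus 1 NaN column each
distinct_values = {
    "telecommuting": 2, "has_company_logo": 2, "has_questions": 2,
    "employment_type": 5, "required_experience": 7, "required_education": 13,
    "industry": 125, "function": 37,
    "department": 107, "location": 144,   # counts after bucketing into 'others'
}
n_categorical = sum(n + 1 for n in distinct_values.values())

n_columns = n_continuous + n_categorical + 1   # +1 for the fraudulent label
print(n_continuous, n_categorical, n_columns)  # prints: 357 454 812
```

Under these assumptions the count matches the reported shape, which suggests no feature was silently dropped or duplicated along the way.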

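Handling class imbalance

In this task the positive and negative values of the fraudulent label are highly imbalanced: there are far fewer positives than negatives, as the figure shows.

To deal with the class imbalance in the training set, I use two strategies:

Oversample the positive samples in the training set

Use focal loss to increase the penalty for predicting a positive sample as negative

Oversampling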

```python
import pandas as pd
import torch
from imblearn.over_sampling import RandomOverSampler

train_data = pd.read_csv('train_data.csv')
test_data = pd.read_csv('test_data.csv')

X_test = torch.tensor(test_data.iloc[:, :-1].values, dtype=torch.float32)
y_test = torch.tensor(test_data.iloc[:, -1].values, dtype=torch.long)

X_train, y_train = train_data.iloc[:, :-1].values, train_data.iloc[:, -1].values
# Oversample class 1 until it reaches 0.33x the number of class-0 samples
ros = RandomOverSampler(sampling_strategy={1: int(0.33 * len(y_train[y_train == 0]))})
X_train, y_train = ros.fit_resample(X_train, y_train)

# Build the training tensors from the resampled training arrays.
# (Building them from test_data here would train the model on the test set.)
X_train = torch.tensor(X_train, dtype=torch.float32)
y_train = torch.tensor(y_train, dtype=torch.long)
```

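As the code above shows, the samples labeled 1 in the training set are drawn repeatedly until their number reaches about one third (0.33) of the number of samples labeled 0.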
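The effect of this rule on the class mix can be sketched in plain Python. This illustrates random oversampling in general, not the imbalanced-learn implementation; `random_oversample` and the class counts are hypothetical.

```python
import random


def random_oversample(rows, labels, target_pos, seed=0):
    """Duplicate random positive rows until there are `target_pos` of them."""
    rng = random.Random(seed)
    pos_rows = [r for r, y in zip(rows, labels) if y == 1]
    n_extra = max(0, target_pos - len(pos_rows))
    extra = [rng.choice(pos_rows) for _ in range(n_extra)]
    return rows + extra, labels + [1] * n_extra


labels = [0] * 950 + [1] * 50                 # hypothetical class counts
rows = [[float(i)] for i in range(len(labels))]
target_pos = int(0.33 * labels.count(0))      # same rule as described above
new_rows, new_labels = random_oversample(rows, labels, target_pos)
pos_share = new_labels.count(1) / len(new_labels)
print(new_labels.count(1), round(pos_share, 3))
```

With a positive-to-negative ratio of 0.33, positives make up roughly a quarter of the resampled training set. No new information is added, because every extra row is an exact copy of an existing positive.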

Focal loss

Focal loss is a loss function designed specifically for class imbalance. It was first proposed by researchers at Facebook in the paper "Focal Loss for Dense Object Detection". It is commonly used in object detection (for example in RetinaNet) to reduce the effect of imbalanced positive and negative samples on training.

The focal loss is defined as:

$$FL(p_t) = -\alpha_t (1 - p_t)^\gamma \log(p_t)$$

where $p_t$ is defined as:

$$p_t = \begin{cases} p, & \text{if the label is positive} \\ 1-p, & \text{if the label is negative} \end{cases}$$

Here $p$ is the predicted probability of the positive class.

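$\alpha_t$ is the class weight: $\alpha_1$ for positive samples and $\alpha_0$ for negative samples.

$\gamma$ is the focusing parameter; when $\gamma = 0$ the loss reduces to $\alpha$-weighted cross-entropy.

$(1 - p_t)^\gamma$ is the modulating factor: the larger $p_t$ is (an easy sample), the smaller its contribution to the loss; the smaller $p_t$ is (a hard sample), the larger its contribution.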

In my design I chose $\alpha_0 = 1$, $\alpha_1 = 5$, $\gamma = 2$.

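```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Assumed setup: `device` is not defined in this excerpt
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
# alpha_0 = 1 (negative class), alpha_1 = 5 (positive class), shape (num_labels, 1)
alpha = torch.tensor([[1.0], [5.0]])


class FocalLoss(nn.Module):
    def __init__(self, alpha, gamma=2, reduction='mean'):
        """
        alpha: tensor of shape (num_labels, 1), one weight per class
        gamma: focusing parameter that balances easy and hard samples
        reduction: how the loss is aggregated: 'mean', 'sum', or 'none'
        """
        super().__init__()
        self.alpha = alpha.to(device)
        self.gamma = gamma
        self.reduction = reduction

    def forward(self, pred, target):
        """
        pred: tensor (batch_size, num_labels), predicted probability of each class
        target: tensor (batch_size,), true labels
        """
        # p_t: predicted probability of the true class, shape (batch_size, 1)
        pred = pred.gather(1, target.unsqueeze(1))
        # Clamp to avoid log(0) if a probability underflows
        pred = pred.clamp(min=1e-8)
        pred = -(1 - pred) ** self.gamma * torch.log(pred)
        # alpha_t: weight of the true class, shape (batch_size, 1)
        selected_alpha = torch.matmul(F.one_hot(target, num_classes=2).float(), self.alpha)
        loss = pred * selected_alpha
        if self.reduction == 'mean':
            return torch.mean(loss)
        elif self.reduction == 'sum':
            return torch.sum(loss)
        else:
            return loss


loss_fn = FocalLoss(alpha)
```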
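Plugging numbers into the formula makes the effect of the chosen parameters concrete. The sketch below evaluates the per-sample focal loss in plain Python for two hypothetical predictions, using $\alpha_0 = 1$, $\alpha_1 = 5$, $\gamma = 2$ from the text; the probabilities 0.9 and 0.1 are made up for illustration.

```python
import math


def focal_loss(p_t, alpha_t, gamma=2.0):
    """Focal loss of one sample given the probability of its true class."""
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def cross_entropy(p_t):
    """Plain cross-entropy of one sample, for comparison."""
    return -math.log(p_t)


easy_negative = focal_loss(p_t=0.9, alpha_t=1.0)   # genuine post, confidently correct
hard_positive = focal_loss(p_t=0.1, alpha_t=5.0)   # fraudulent post predicted as genuine
print(f"easy negative: CE={cross_entropy(0.9):.4f}, FL={easy_negative:.4f}")
print(f"hard positive: CE={cross_entropy(0.1):.4f}, FL={hard_positive:.4f}")
```

The easy negative is scaled down by a factor of about 100 relative to cross-entropy, while the misclassified positive is scaled up, so the many easy negatives no longer dominate the gradient.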

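Model design

With all features converted to numeric columns, this is a plain binary classification task on tabular data, so a few fully connected layers are enough. Dropout layers are added to prevent overfitting.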

```python
class myNN(nn.Module):
    def __init__(self, input_dim, output_dim, dropout=0.2):
        super().__init__()
        # Widen to 2x the input, narrow to 0.5x, then project to the classes
        self.fc1 = nn.Linear(input_dim, 2 * input_dim)
        self.fc2 = nn.Linear(2 * input_dim, int(0.5 * input_dim))
        self.fc3 = nn.Linear(int(0.5 * input_dim), output_dim)
        self.relu = nn.ReLU()
        self.softmax = nn.Softmax(dim=1)
        self.dropout = nn.Dropout(dropout)

    def forward(self, x):
        x = self.relu(self.fc1(x))
        x = self.dropout(x)
        x = self.relu(self.fc2(x))
        x = self.dropout(x)
        x = self.fc3(x)
        # Return class probabilities, which is what FocalLoss expects
        return self.softmax(x)


net = myNN(X_train.shape[1], 2)
```
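A quick count of trainable parameters helps explain why regularization matters here. The arithmetic assumes input_dim = 811 (the 812 columns minus the label) and mirrors the three layer sizes above; `linear_params` is a hypothetical helper.

```python
def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


input_dim, output_dim = 811, 2          # 812 columns minus the label
hidden1 = 2 * input_dim                 # width of fc1's output
hidden2 = int(0.5 * input_dim)          # width of fc2's output

total = (linear_params(input_dim, hidden1)
         + linear_params(hidden1, hidden2)
         + linear_params(hidden2, output_dim))
print(hidden1, hidden2, total)
```

That is close to two million parameters against 8999 training rows before oversampling, so the network has ample capacity to memorize the training data without dropout and weight decay.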

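Evaluation metric: AUC

AUC (Area Under Curve) is the area under the ROC curve, a value between 0 and 1, where 0.5 corresponds to random ranking. As a single number it gives a direct measure of classifier quality: the larger, the better. For details see the competition link: https://www.datafountain.cn/competitions/448.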

```python
from sklearn.metrics import auc, roc_curve


def calculate_auc(preds, labels):
    """
    - preds: predicted probabilities, numpy array (num_samples, 2)
    - labels: true labels, numpy array (num_samples,)
    """
    # The last column is the probability of the positive class
    y_pred_proba = preds[:, -1]
    fpr, tpr, thresholds = roc_curve(labels, y_pred_proba)
    roc_auc = auc(fpr, tpr)
    return roc_auc
```
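AUC can also be read as the probability that a randomly chosen positive sample is scored higher than a randomly chosen negative one, with ties counting one half. The pure-Python sketch below uses that pairwise definition to show why accuracy alone is misleading on data with 95% negatives; `pairwise_auc` and the toy labels are hypothetical.

```python
def pairwise_auc(scores, labels):
    """AUC as P(score of a positive > score of a negative); ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


labels = [0] * 95 + [1] * 5                    # hypothetical 95% / 5% data
constant_scores = [0.0] * 100                  # always predict "not fraudulent"
accuracy = sum(1 for y in labels if y == 0) / len(labels)
print(accuracy, pairwise_auc(constant_scores, labels))
```

A model that never flags a posting reaches 95% accuracy but only a chance-level AUC. The pairwise loop is quadratic, so it suits small illustrations rather than full datasets.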

Experimental results and analysis

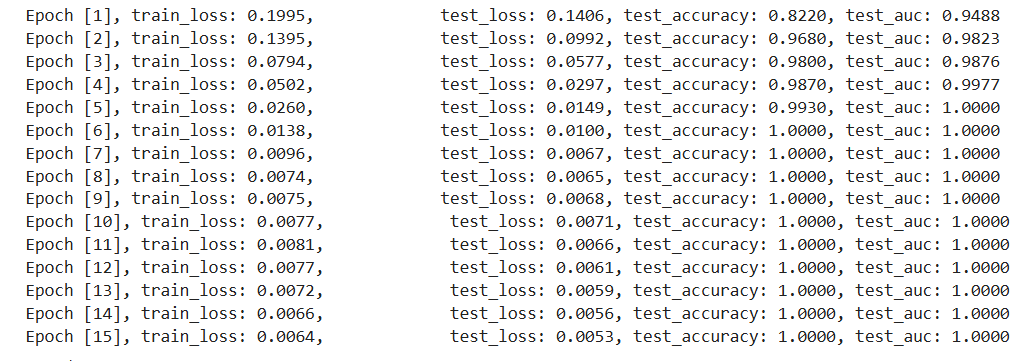

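Training used a single NVIDIA GeForce RTX 3090 with batch_size=200, num_epochs=15, and a learning rate of 0.001, with the Adam optimizer. To prevent overfitting, the weight decay was set to 0.01.

The training code is as follows: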

```python
import torch
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset


def train(device, net, X_train, y_train, X_test, y_test,
          batch_size=200, num_epochs=15, lr=0.001, weight_decay=0.01):
    """
    - device: device to train on
    - net: neural network model
    - X_train: training features (tensor) (num_train, dimension)
    - y_train: training labels (tensor) (num_train,)
    - X_test: test features (tensor) (num_test, dimension)
    - y_test: test labels (tensor) (num_test,)
    - batch_size: mini-batch size
    - num_epochs: number of passes over the training set
    - lr: learning rate
    - weight_decay: L2 penalty passed to Adam
    """
    train_dataset = TensorDataset(X_train, y_train)
    train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
    net = net.to(device)
    optimizer = optim.Adam(net.parameters(), lr=lr, weight_decay=weight_decay)

    for epoch in range(num_epochs):
        net.train()
        ls = 0.0
        for inputs, labels in train_loader:
            inputs, labels = inputs.to(device), labels.to(device)
            optimizer.zero_grad()
            outputs = net(inputs)
            loss = loss_fn(outputs, labels)
            loss.backward()
            optimizer.step()
            # Accumulate a Python float so the autograd graph is not kept alive
            ls += loss.item()

        net.eval()
        with torch.no_grad():
            train_loss = ls / len(train_loader)
            X_test = X_test.to(device)
            y_test = y_test.to(device)
            test_outputs = net(X_test)
            test_loss = loss_fn(test_outputs, y_test).item()
            _, test_predicted = torch.max(test_outputs, 1)
            test_accuracy = (test_predicted == y_test).float().mean().item()
            test_auc = calculate_auc(test_outputs.cpu().numpy(), y_test.cpu().numpy())
            print(f"Epoch [{epoch + 1}], train_loss: {train_loss:.4f}, "
                  f"test_loss: {test_loss:.4f}, test_accuracy: {test_accuracy:.4f}, "
                  f"test_auc: {test_auc:.4f}")
    return net
```

The training results are shown in the figure:

The model already performs very well after 3 epochs of training and converges after epoch 8.

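This was a bit of a shock: the test accuracy is 100% and the AUC is 1. A perfect score on a task like this usually calls for a leakage check, in particular that the training tensors passed to train are built from the oversampled training data and not from test_data.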

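Statement:

The training set and the test set are mutually exclusive

During data processing, the test set's features were used when computing means, variances, and frequencies

Labels are not visible during data processing, so there is no leakage of test-set labels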
